Why “intelligible pensions” is the tech question of our time

Ask any adviser or paraplanner what’s hardest to explain, and pensions will be near the top of the list. Between legacy schemes, tax quirks, retirement choices and regulation, even a “plain English” summary can sound like a puzzle.

So when I saw the headline “Could AI be the answer to making pensions intelligible for all?”, I stopped scrolling. Because if there’s one part of advice crying out for clarity, it’s pensions.

This month’s TechTalk takes that question and pairs it with three big tech moves in our sector – to explore how AI might finally help advice make sense to everyone.

1 | “Intelligible” isn’t simple – it’s structural

A recent Professional Adviser article hit the nail on the head: pension language isn’t confusing by accident. It’s confusing because the system itself is complex. AI can’t magic that away – but it can act as a translator between the system and the saver.

In practice, that could mean:

- Explaining scheme rules or benefits in plain, personal terms

- Letting users ask questions in their own words (and get answers that build confidence)

- Tailoring explanations to different literacy or knowledge levels

- Spotting when someone’s lost, and suggesting when to bring a human into the loop

We’re already seeing early versions of this – “pension specialist” chatbots that can interpret scheme documents and respond conversationally. It’s promising, but as always, the line between simplification and distortion is thin. Accuracy, oversight, and context are everything.

2 | AI is moving from experiment to infrastructure

If you look at what’s happening across financial services, the pattern is clear – AI isn’t just a novelty anymore. It’s being built into the backbone of how firms work.

- Legal & General × Microsoft are using AI to enhance client interactions and anticipate needs.

- AJ Bell + Dynamic Planner have integrated to remove manual handoffs – the groundwork AI depends on.

- Nucleus / Third Financial + Titan Wealth are taking it further with platform-as-a-service flexibility, giving firms room to plug in AI tools of their own.

The message? These aren’t pilot projects anymore. They’re early signs of AI becoming part of the advice architecture – less about shiny new tools, more about smoother, smarter systems.

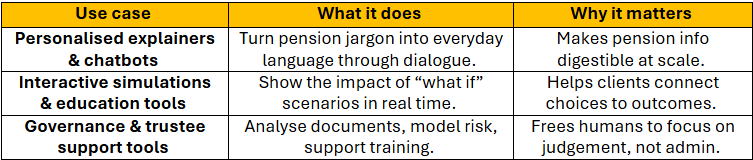

3 | Where AI is already proving useful

A few real-world examples show where AI is already earning its place:

But let’s not gloss over the caveats – hallucinations, data privacy, and regulatory limits still need careful handling. Used well, AI could raise standards. Used badly, it risks confusion at scale.

4 | Four questions to ask before you jump in

If you’re thinking about introducing AI to improve clarity, start by asking:

1️⃣ Where are clients getting stuck? Is it in benefit summaries, decumulation options, or tax explanations? Start where misunderstanding costs the most time.

2️⃣ Do your systems talk to each other? AI is only as smart as the data it can reach. If your tools aren’t connected, your AI will be guessing in the dark.

3️⃣ What’s your “human handover” rule? Decide early when a person should step in – and make sure that’s logged, not left to chance.

4️⃣ How will you measure understanding? Track feedback, rewording requests, and drop-off points. They’re gold dust for continuous improvement.

AI should assist advice, not automate it. The moment clients feel “talked at” instead of “spoken with,” we’ve missed the point.
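For firms with a developer on hand, questions 3 and 4 can be made concrete in a few lines. What follows is a minimal sketch, not a recommended implementation: the topic names, the rewording threshold and every function name are hypothetical, picked purely for illustration. It shows a handover rule that always records why a person was brought in, plus a simple count of rewording requests as one signal of understanding.

```python
from dataclasses import dataclass, field

# Hypothetical values for illustration only; a real firm would set its own.
MAX_REWORD_REQUESTS = 2
HUMAN_ONLY_TOPICS = {"transfer", "drawdown", "tax"}


@dataclass
class Session:
    client_id: str
    reword_requests: int = 0
    handover_log: list = field(default_factory=list)


def record_reword(session: Session) -> None:
    """Count each time the client asks for an explanation to be rephrased."""
    session.reword_requests += 1


def needs_human(session: Session, topic: str) -> bool:
    """Return True, and log the reason, when a person should step in."""
    if topic in HUMAN_ONLY_TOPICS:
        reason = f"topic '{topic}' is reserved for an adviser"
    elif session.reword_requests > MAX_REWORD_REQUESTS:
        reason = "client asked for rewording too many times"
    else:
        return False
    # The handover is always logged, never left to chance.
    session.handover_log.append(reason)
    return True
```

The point of the design is that the decision and its reason are written down in the same step, so the log doubles as evidence for oversight and as a record of where clients get stuck.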

5 | The regulatory line is still forming

Right now, there’s a healthy debate about whether AI explanations could ever count as “advice.” The FCA’s watching closely – especially as the 2026 pensions dashboards start taking shape.

Experts are already urging boards to build AI governance policies: define your scope, set guardrails, and make sure accountability stays human. The PLSA has echoed that message – cautious optimism, yes, but oversight must stay front and centre.

Final thoughts: yes – but only if we build it well

So, could AI make pensions intelligible for all? Yes – if we let it translate, not replace.

AI won’t remove complexity, but it can help bridge the gap between technical language and human understanding. Done right, it can make advice land – turning jargon into clarity, curiosity into confidence.

The real test isn’t whether AI can talk. It’s whether clients trust what it says.

If that’s the challenge your firm’s exploring, we’d love to hear how you’re approaching it – no pitch, just a shared curiosity about doing advice better.